Big data and deep learning for RNA biology | Experimental & Molecular Medicine – Nature.com

Abstract

The exponential growth of big data in RNA biology (RB) has led to the development of deep learning (DL) models that have driven crucial discoveries. As constantly evidenced by DL studies in other fields, the successful implementation of DL in RB depends heavily on the effective utilization of large-scale datasets from public databases. In achieving this goal, data encoding methods, learning algorithms, and techniques that align well with biological domain knowledge have played pivotal roles. In this review, we provide guiding principles for applying these DL concepts to various problems in RB by demonstrating successful examples and associated methodologies. We also discuss the remaining challenges in developing DL models for RB and suggest strategies to overcome these challenges. Overall, this review aims to illuminate the compelling potential of DL for RB and ways to apply this powerful technology to investigate the intriguing biology of RNA more effectively.

Similar content being viewed by others

Leveraging big data with deep learning in RNA biology

Over the last decade, deep learning (DL) has proven to be a versatile tool in biology, aiding in multiple breakthroughs in structural biology, genomics, and transcriptomics1. The power of DL lies in its unique ability to harness the potential of big data2. Recently, big data have been rapidly accumulating in multiple domains of biology3. In particular, high-throughput experiments based on RNA sequencing (RNA-seq) have led to the generation of massive amounts of RNA biology (RB) data4. Analyzing these big biological data with DL has led to novel scientific discoveries about RNA and related biological processes. Therefore, it would be beneficial to review the current progress of DL in RB, focusing on the role of big data.

DL models are multilayered artificial neural networks that learn to generate representations of input data. These models can perform downstream tasks such as regression, classification, and generation. They have higher degrees of freedom than do conventional machine learning algorithms and thus can effectively learn representations from high-dimensional data5. This property has allowed DL models to achieve groundbreaking success in various fields, including computer vision6, natural language processing7, and structural biology8. Constructing such effective DL models requires sufficiently large datasets. However, the availability of such datasets is often a major bottleneck. Auspiciously, the amount of biological data has exploded due to the universal use of high-throughput experiments in RB.

RB is an integrative field of biology in which biological processes involving diverse types of RNA are studied. The utilization of DL in this field has been driven by high-throughput experiments using RNA-seq. These experiments have led to the generation of large-scale biological data for systematically examining RNA-related phenomena. Among the representative examples are cross-linking and immunoprecipitation (CLIP) for protein binding analysis9,10, N6-methyladenosine-sequencing (m6A-seq) for RNA modification analysis11, DMS-seq for RNA structure analysis12, and single-cell RNA sequencing13.

Although DL is a promising approach for revealing the mechanisms underlying RNA-related biological processes, this approach is not without challenges. First, most of the popular DL architectures and algorithms have not been optimized for biological data and tasks. Second, obtaining a training dataset of sufficient size and quality is often difficult. Third, the difficulty in understanding the prediction of DL models often impedes the conception of scientific hypotheses, which requires understanding the causal relationship between the input and the output14. Nevertheless, successful examples of employing DL in various domains of RB demonstrate the feasibility of acquiring biological insights from big biological data. This goal can be achieved by selecting and optimizing adequate strategies and techniques of DL regarding the characteristics of transcriptomic data.

This review provides an introductory guide to employing DL for novel discoveries in RB. First, we review widely used large public databases for RB, focusing on their utility in building DL datasets. Next, we describe how popular DL methods can be employed to exploit and complement the characteristics of RB datasets. We then introduce the methods for encoding various types of RB data into input features and popular deep neural network architectures suitable for processing these features. The primary goal of these sections is to provide a foundational understanding for designing and training DL models that can learn robust representations of big RB data. Subsequently, we review successful applications of DL in various domains of RB. Finally, we discuss the desiderata and open challenges in applying DL to RB.

Public sources of large-scale RNA biology data

Training a DL model for RB applications starts with obtaining a suitable training dataset. Multiple de facto standard datasets for training and benchmarking exist for conventional DL applications, such as computer vision and natural language processing. However, such datasets are scarce in RB. Therefore, researchers often have to construct new training datasets by collecting data from existing public databases. When building training datasets from public biological databases, filtering, labeling, and normalizing the experimental data using metadata are essential. Metadata are collections of sample or experiment-associated information, often including experiment type, experimental group, sample type, organism, health status, and sequencing platform metadata15,16. Most public biological databases provide metadata, but their format, stringency, and completeness vary widely. In this section, we review such public databases for RB, focusing on experimental data types and metadata (Table 1).

GEO and SRA

The Gene Expression Omnibus (GEO) is a public repository of gene expression data, including RNA-seq and microarray data, managed by the NCBI17. As of January 2024, GEO contains expression data for more than 6.97 million biosamples, including more than 1.86 million RNA samples. One representative example of public data archived in the database is the National Institutes of Health (NIH) Roadmap Epigenomics Project, which provides 111 reference human epigenomes with gene expression profiles18. Since GEO does not store raw data from high-throughput sequencing experiments, the data are archived in the Sequence Read Archive (SRA). SRA was established to provide a public archive of high-throughput sequencing data in conjunction with GEO19. As of January 2024, SRA has hosted more than 10.9 million publicly available RNA experiments.

While GEO serves as a unique and irreplaceable data source for RB researchers, one of its limitations is its lenient control over metadata items and terms, resulting in the incoherence and fragmentation of metadata20,21. Aliases and missing metadata fields often hamper the automated filtering and labeling of experimental data. While there have been several efforts to mitigate this issue21,22, improving the integrity of GEO metadata remains a challenge. Moreover, ensuring the quality of the data and analysis pipeline is primarily the responsibility of the submitters, introducing a potential source of data inconsistencies and imperfections when compiling a training dataset from GEO. Therefore, while GEO is an unparalleled source of RB data, cautious filtering, validation, and normalization are required to assemble a training dataset from the database.

ENCODE

The Encyclopedia of DNA Elements (ENCODE) project, driven by the ENCODE consortium, which is organized and funded by the National Human Genome Research Institute (NHGRI), provides a wide range of functional genomics data23. In the third phase of ENCODE (ENCODE 3), the quality and quantity of public functional genomics were improved by adding 5992 experiments, including 170 eCLIP experiments, 78 RBNS experiments, 155 RAMPAGE experiments, 198 miRNA/small RNA-seq experiments, and 340 total/poly(A) RNA-seq experiments24. Moreover, ENCODE contains tissue expression data (EN-TEx), which include gene expression profiles and 15 functional genomics assay data from 30 human tissues25. One of the most prominent features of the database is that it enforces standardized experiment-specific quality control methods for both the data and the metadata26. The experimental data are audited, and audit flags are placed on a variety of potential imperfections in the data. Moreover, the use of controlled fields and terminologies for each metadata entry is enforced. ENCODE also provides unified analysis pipelines for multiple types of RNA experiments, improving the reproducibility of the analyses. Another notable feature of ENCODE is that it supports a powerful filtering functionality, allowing researchers to select data based on the assay type, target gene, target organism, cell line, sequencing platform, and many other features. Therefore, ENCODE is an essential source of quality-controlled data for constructing training datasets for functional genomics DL models.

ArrayExpress & ENA

ArrayExpress is a public archive for functional genomic data managed by the European Bioinformatics Institute (EBI)27. This archive includes 46 types of functional genomics data, including 13,988 RNA-seq studies and 51,250 array studies. The submitted data are enforced to meet minimal metadata requirements and are manually curated by bioinformaticians, but the database does not provide detailed audit information. The raw data from high-throughput sequencing experiments in ArrayExpress are archived in the European Nucleotide Archive (ENA). The submitted data are required to meet quality standards. The ENA manages the metadata to ensure that the minimal standards are met using controlled fields and vocabulary28.

FANTOM

The Functional Annotation of the Mammalian Genome (FANTOM) consortium aims to improve the understanding of the gene regulation network29. The FANTOM database provides atlases of gene regulatory elements and noncoding RNAs30,31. The database primarily hosts RNA-seq and cap analysis of gene expression (CAGE) data. CAGE accurately maps transcription start sites (TSSs) by pulling down 5’ caps32. The current phase of the project, FANTOM6, is focused on characterizing the global regulatory effect of human lncRNAs33.

GTEx, TCGA, and ICGC

Genotype–Tissue Expression (GTEx) is a project initiated by the NIH to provide RNA-seq data for various human tissues associated with genomic sequences and map tissue-specific and global expression quantitative trait loci (eQTL)34. The completed GTEx v8 project encompasses 54 tissue types of human adults, and the developmental GTEx (dGTEx) project, which is ongoing, includes tissue samples from infants and juveniles. The Cancer Genome Atlas (TCGA), managed by the National Cancer Institute (NCI), also provides RNA-seq data from various human tissues, both normal and cancer35. Along with standard RNA-seq data, TCGA provides miRNA-seq data from more than 1800 samples. Therefore, TCGA can provide a potential source of training data for investigating the miRNA landscape and regulatory network. Statistical data, such as read counts and normalized expression levels, are publicly available in both the GTEx and TCGA databases. In contrast, raw sequence data are available only for researchers authorized by the NIH Database of Genotypes and Phenotypes (dbGaP). A collaboration between TCGA consortium and the International Cancer Genome Consortium (ICGC) led to the Pan-Cancer Analysis of Whole Genomes (PCAWG) project. The sequencing effort included 2793 cancer patients across 20 primary sites, and RNA-seq data were obtained from 1222 patients36. These databases serve as important data sources when constructing training datasets for tasks involving differential gene expression regulation, eQTL, or cancer-associated gene expression regulation.

Characteristics of RNA biology data and related deep learning methods

It is ideal to have a perfectly curated and sufficiently large dataset to train a DL model. However, obtaining such a dataset is not always possible, and adopting this approach is especially challenging in biology due to the relatively high cost of data production. Indeed, there have been multiple efforts to construct comprehensive biology databases, including GEO, SRA, and ENCODE, as reviewed in the previous section. However, such databases often suffer from limited metadata integrity20,21. Therefore, researchers often have to mitigate this issue when building training datasets for DL models in RB. A critical step in mitigating training dataset imperfection is to select an appropriate method for training DL models. Here, we review popular DL methods and describe their utility in leveraging RB data.

Supervised learning

The term paradigm in machine learning corresponds to the classification of learning methods based on the input data and task37. Supervised learning utilizes labeled data to train a model to accurately predict the labels in the training set, whereas unsupervised learning trains a model to efficiently represent the input data without using labels. The combination of the two methods is termed semi-supervised learning, in which a model is simultaneously trained on large unlabeled datasets and small labeled datasets38. Supervised learning is the dominant choice among these paradigms since it can be directly applied to various downstream prediction tasks. Many predictive applications of DL in RB have also adopted supervised learning, e.g., miRNA target prediction, gene expression prediction, RBP binding prediction, ncRNA biogenesis prediction, and RNA modification prediction.

Supervised learning provides a straightforward framework for DL, but it is not without limitations. Supervised learning usually requires a sufficient amount of reliable labeled data, which is not always available, especially in RB39. Specifically, uncontrolled metadata in public RB databases impede the construction of labeled training datasets. Moreover, supervised learning can easily let DL models pick up biases in data labels, often introduced by experimental artifacts or data analysis pipelines. Even after standard filtering procedures, biological noise in experimentally generated data labels can complicate the training of DL models using supervised learning. Therefore, training a model to learn biological knowledge with supervised learning can occasionally be challenging. In such cases, exploring other DL paradigms can be beneficial.

Self-supervised learning

Labeling data for supervised learning is often expensive and time-consuming, and the amount of labeled data is less than the amount of unlabeled data in most domains. This problem can be overcome by leveraging unsupervised learning. However, unsupervised learning methods are applicable only to limited types of RB tasks, such as cell clustering and substructure detection40,41. To address this limitation, a self-supervised learning paradigm has evolved. In self-supervised learning, a model is trained on unlabeled data as in unsupervised learning. However, the model is trained with a supervised learning objective by generating labels from unlabeled data itself42,43. This paradigm has gained momentum in natural language processing applications through the success of large language models7,43.

To employ self-supervised training in practice, a model is pre-trained on a large unlabeled dataset in a self-supervised manner, learning generalizable knowledge such as language structure. Then, the pre-trained model is fine-tuned to perform a specific downstream task using a smaller labeled dataset in a supervised manner. For example, scBERT was pre-trained on unannotated single-cell transcriptome data to predict the expression of randomly masked-out genes. Then, the model was fine-tuned to annotate and discover cell types44. Several other studies have applied a self-supervision framework for RB tasks, including disease modeling45, small molecule–miRNA association prediction46, and RBP prediction47,48. The success of these examples demonstrates the feasibility of learning biological context using massive amounts of unlabeled data. Therefore, self-supervised learning offers promising potential for deciphering the complex context of the transcriptome.

Domain adaptation

Training DL models for RB is often obstructed by the scarcity of training data. This issue is not unique to RB49. Multiple DL methods have been proposed for resolving this issue. In some cases, the scarcity of training data in the desired domain can be overcome by leveraging data from other domains. Domain adaptation is a DL technique for such cases in which a model is trained to capture domain-invariant knowledge without using the target domain label50,51. In biology, the domain concept may correspond to different biological levels, organisms, cell lines, or batches of experiments. For example, scDEAL was initially trained with bulk-level cancer drug response prediction tasks using bulk RNA-seq data. Then, domain adaptation was employed to transfer the learned knowledge for single-cell-level drug response prediction tasks using scRNA-seq data52. Several other DL studies in RB have used domain adaptation methods to mitigate the scarcity of training data in the target domain, including isoform function prediction53, transcription factor (TF) binding site prediction54, and single-cell RNA-seq (scRNA-seq) data classification55. Therefore, domain adaptation can be useful in RB when generating training data is too costly, but related data in another domain are available.

Meta-learning

Meta-learning, or ‘learning-to-learn’, is a collection of methods that allow a model to improve its ability to learn new tasks. In meta-learning, the model is trained on multiple tasks by iterating over each task to learn the knowledge that is generalizable to all the trained tasks as well as new tasks that it can encounter in the future. Meta-learning techniques are instrumental in few-shot tasks, where a model has to make predictions about classes that have only a few examples in the training set56,57. There have been efforts to employ meta-learning for RB since limited training data are a common issue. For example, MARS was trained on annotated and unannotated single-cell transcriptomes from multiple tissues. A meta-learning framework was employed to train a model that can annotate single-cell transcriptomes of novel tissues containing cell types that were not encountered during training58. Other successful examples of these methods include cancer survival prediction via gene expression59, ncRNA-encoded peptide (ncPEP) disease association prediction60, and lncRNA localization prediction61.

Data augmentation

Data augmentation is a popular technique used in data-limited settings, in which diverse transformations are applied to input data to generate additional synthetic examples62. Ideally, the transformations should not affect the task outcome. The simplest forms of augmentation include cropping, rotation, flipping, resizing, recoloring, and blurring. However, such transformations are not always directly applicable to RB data. Instead, reverse complementing the nucleic acid sequence, shifting the sequence, or adding single-nucleotide insertions can be employed for RB data augmentation. Data augmentation has been used in various deep models for RB tasks, including coding potential prediction63 and gene expression prediction54.

Ensemble

Ensemble learning is another technique that has been shown to improve the model performance when training data are limited. In ensemble learning, multiple DL models are combined via various methods, such as training multiple models with sampled data and voting, choosing the best model for each example, and training a model to combine the outputs of multiple models64,65. Multiple RB studies, including RBP motif prediction66, lncRNA identification63,67, splicing variant prediction68, and RNA modification detection via nanopore sequencing69, have leveraged ensemble techniques.

In summary, while supervised learning is a dominant paradigm of DL in RB, its utility is often limited by the availability of experimentally generated labels. To overcome this limitation, various methods, including self-supervised learning, domain adaptation, meta-learning, data augmentation, and ensemble, can be utilized. The potential of these methods can be leveraged for RB studies by selecting an appropriate method for a given task after analyzing the characteristics and imperfections of training data.

Encoding RNA biology data into deep learning input features

DL models require numeric multidimensional arrays, termed tensors, as inputs to extract task-relevant features through matrix multiplication and nonlinear operations. However, many biological data are not provided in such forms. Thus, to utilize DL models in RB, it is crucial to transform these biological data into tensors through a process called encoding. Choosing a suitable encoding technique is cardinal to training a robust DL model. If an encoding method reflects too much information specific to a sample or an experiment, the DL model will fail to generalize. Conversely, the model will fail to learn if an encoding method removes too much information from the original data. Therefore, the essence of encoding is to efficiently represent the generalizable properties of the original data relevant to a given task. In the following, we present representative encoding techniques for RB data that have been successfully utilized in multiple studies.

Nucleic acid sequences

Several types of biological data involving RNA can be leveraged to predict biological processes, including gene expression, RBP binding affinities, and regulatory elements. Among them, nucleic acid sequences are the most widely used data type since, in principle, numerous biological properties can be predicted solely from sequence data. The most common way of encoding nucleic acid sequences is to one-hot encode the bases, which may allow the DL model to automatically capture relevant features (Fig. 1a). In this simple one-hot encoding approach, each base is represented by a binary vector with a single 1, the position of which represents each nucleotide base. For instance, base A is encoded as [1, 0, 0, 0], and base C is encoded as [0, 1, 0, 0]. Moreover, some studies further improved simple mononucleotide one-hot encoding by integrating matrices corresponding to dinucleotides, trinucleotides, purines, pyrimidines, strong hydrogen bonds, weak hydrogen bonds, amino groups, and ketone groups70,71. Other studies have enriched the encoding through one-hot encoding of the k-mers in sliding windows66 and one-hot encoding of the pairwise alignment results72.

a Nucleic acid sequences can be encoded using one-hot encoding, word2vec, embedding layers, or k-mer counts. b When encoding the gene expression data, the normalized expression level or rank vector can be used as an input feature. Gene correlation matrices are also often used to capture gene‒gene interactions. c RNA structure data can be represented using the minimum free energy (MFE), secondary structure matrices, base-pairing probability matrices, or 3D point clouds. d RNA–protein interactions can be represented by counting known binding motifs or encoding CLIP results as binary binding site tensors or continuous coverage tensors. RNA‒RNA interactions can be encoded as 3D matrices to convey base pairing information.

Although one-hot encoding is the most common method for encoding nucleic acid sequences, many studies have adopted techniques from natural language processing to produce more informative encodings of nucleotide sequences. By considering biological sequences as sentences and k-mers in the sequence as words, biological sequences can be encoded using the natural language encoding technique termed word2vec73. In word2vec, each word is represented by a large vector called a word embedding. A word embedding is learned by either predicting a word of interest from the surrounding words or predicting surrounding words from a word. Several studies have used word2vec to encode sequence data in RB to improve the performance72,74,75,76. Word2vec is generalized by expanding the subject from a word to a paragraph. This technique, named doc2vec77, was also adopted in RB to generate embeddings for the whole gene or miRNA sequences78. Word embeddings can also be generated during training time by randomly initializing embeddings and updating these vectors via a neural network layer called the embedding layer. This method can generate word embeddings that are more relevant to the objective of the model. For example, m6Anet employs this scheme to generate motif embedding vectors79. In addition to the encoding methods mentioned above, the numbers of nucleotide monomers and polymers were also used to encode sequence data80,81.

Expressions

Expression data are important input features since the expression levels of regulatory genes and landmark genes are correlated with diverse biological processes. These data are primarily derived from RNA-seq and CAGE. The simplest method for encoding expression data is to use normalized expression values from RNA-seq data82,83 (Fig. 1b). Aptardi extended this simple encoding method by considering expression value fluctuations in a local region. Specifically, the authors partitioned the region into three equal-sized bins and calculated the differences in expression values between neighboring partitions81. Other studies, such as DMIL-IsoFun and HiCoEx, encoded global interactions of expression data with a 2-dimensional matrix84,85.

Structures

RNA structures are fundamental data for investigating the interaction, functions, and stability of RNAs. Many DL methods utilize the RNA structure in various ways80,82 (Fig. 1c). The simplest way to encode the RNA structure is the minimum free energy (MFE)86,87. However, as a scalar value, the MFE conveys limited information, and DL models may benefit from more information-rich vector representations of RNA structures. There are several methods for encoding RNA structure data as a 1D tensor. For instance, the nucleotide-wise probability of structural contexts, such as hairpin loops, inner loops, and multiloops, can be used to obtain a continuous or binarized encoding tensor of RNA structures88,89. Furthermore, the frequency and distance of these structural contexts can be added to the encoding86. Apart from encoding structural contexts, the 3D coordinates of each atom or nucleotide in a tertiary structure can be encoded as a 1D tensor90. The RNA structure can be encoded as a 2D tensor of pairwise binding probabilities72,85,91.

Bindings

Another type of data frequently used in DL for RB is the binding between RNA and regulatory molecules, including TFs, miRNAs, and RBPs, which play crucial roles in biological processes (Fig. 1d). One simple method for encoding protein‒RNA/DNA binding data is to count the known binding motifs in the region of interest80,92. However, this method does not accurately reflect the in vivo interactions between biological molecules. Several studies utilized in vivo binding data derived from CLIP data to address this issue. To encode the information from CLIP data, coverage vector93 and binarized binding matrix94 were used. Unlike protein–RNA interactions, RNA–RNA interaction data are often encoded as multidimensional tensors to convey base-pairing information. One way to encode miRNA‒target interactions is via a 3D matrix, in which the first two dimensions represent the miRNA and target sequence positions, and the last dimension represents the one-hot encoded combination of miRNA bases and target bases95.

Deep learning architectures for leveraging RNA biology data

The DL architecture is the structure of neural networks, including connections between layers and operations in each layer. According to the universal approximation theorem, a neural network with a single sufficiently large hidden layer and a nonlinear function can approximate any continuous function96. However, such shallow architectures usually fall short in practical tasks, as they fail to learn or require too many neurons. Nevertheless, such problems have been alleviated with improved architectures. For instance, before the introduction of an efficient architecture named the convolutional neural network (CNN) by LeCun et al.97, DL was not widely applied to images due to the inefficiency and suboptimal performance. After it was introduced, the CNN architecture was widely adopted in computer vision tasks and exceeded human-level performance6. Therefore, choosing a suitable architecture that can work efficiently with a given data type and task is crucial. Here, we review DL architectures that have been shown to work effectively with various types of biological data and tasks.

Multilayer perceptrons

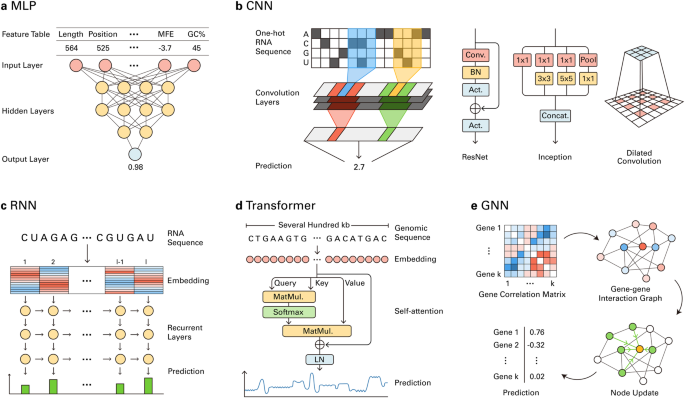

The multilayer perceptron (MLP) is the simplest form of a DL model and comprises multiple hidden layers of neurons (Fig. 2a). Each neuron receives input from other neurons from the previous layer, performs linear transformation, and applies a nonlinear activation function. During training, the weight of each neuron is iteratively updated via backpropagation, which involves the computation of the gradient of the loss function for each training example98. MLPs can learn to generate informative vector representations of the input data, which allows them to perform predictive tasks such as regression and classification. Several early DL models in RB have been developed based on the MLP architecture for various tasks, including lncRNA prediction, gene expression prediction, tissue-specific alternative splicing prediction, and structure-based RBP binding site prediction80,99,100. Another neural network architecture similar to the MLP is the deep belief network (DBN), where each layer is initially trained individually and then trained together to construct and train a deeper neural network101. The DBN has been utilized for lncRNA identification99, tumor clustering40, and RBP binding site prediction102.

a MLPs can make probabilistic predictions from biological feature tables. b CNNs can predict biological features from one-hot-encoded biological sequences by capturing local patterns. ResNet, Inception, and dilated convolution can improve the performance and increase the input size. c RNNs can process embeddings of RNA sequences to provide basewise predictions of biological features. d GNNs can operate on gene–gene interaction networks derived from gene correlation matrices to predict genewise biological features. e Transformers can capture long-range interactions from genomic sequences of several hundred kilobases using multi-head self-attention.

Convolutional neural networks

The local patterns and interactions observed in tensor encodings of biological data, such as one-hot sequences, are fundamental features that are relevant to biological processes. For instance, the motifs for regulatory elements can be discovered by examining local interactions on DNA sequences, and RBP binding motifs can be discovered from local interactions of RNA sequences. Among DL architectures, CNNs have achieved robust performance in learning and aggregating local interactions97,103. Inspired by this success, CNN-based models have been frequently utilized for DL in RB. CNNs process data using weight matrices called convolution kernels, whose elements are randomly initialized (Fig. 2b). The core of the CNN is the convolution layer, which can be understood as the convolution kernels sliding across the input tensor. In each sliding step, the sum of the elementwise product for each kernel and each patch of the input tensor is calculated. By iterating through this process, the kernels can be updated to capture task-relevant local interactions in the input data. When biological sequences are used as inputs, convolution kernels can function as automatically learned motif detectors104. Hence, the natural use of CNNs is to detect local motif-related features such as regulatory elements105,106,107, protein–nucleic acid interactions108, nucleic acid–nucleic acid interactions95, 5′ UTR strength prediction109, and polyadenylation (poly(A))70,110. The CNN architecture has outperformed the classical machine learning and MLP architectures in various RB tasks. Several papers benchmarked the CNN and other architectures for specific RB tasks and demonstrated the robust performance of the CNN111,112.

Sophisticated biological properties require models to capture long-range interactions. This can be achieved by stacking additional convolution layers. However, simply adding more layers to the CNN results in suboptimal performance since the increased distance between the input and the output impedes the propagation of the gradient through the model. To overcome this limitation, He et al. proposed ResNet6, which utilizes skip connections to connect the input directly to the deeper layers. This architecture enables the stable training of the deep CNN models. ResNets have been used for DL in RB to capture longer interactions among captured motifs on biological sequences or data to predict splicing113,114, regulatory activities105, and N6-methyladenosine (m6A) modification115. ResNets can capture longer interactions than can simple CNNs but usually struggle to capture interactions between elements thousands of bases apart, such as splicing donors and receptor sequences. Therefore, several RB tasks, such as splicing prediction, require additional methods to capture long-range interactions. This problem can be addressed by adopting dilated convolution. In dilated convolution, each element of the kernel is applied to every n-th element of the input, increasing the range in which interactions can be captured116. Dilated convolution has been used in various RB tasks that require capturing long-range interactions54,105,113,115. In addition, integrating multiple convolution layers of different sizes, as suggested in the Inception architecture, can improve the performance of CNNs by allowing them to capture interactions of diverse sizes117. The idea was adopted in DeepExpression to predict gene expression from sequences107 and in CUP-AI-Dx to classify metastatic cancers using RNA-seq118.

Recurrent neural networks

The natural analogy between a convolutional kernel and a motif detector has led many researchers to use CNNs to process biological sequence data. However, it is common in natural language processing to process sequence data with recurrent neural networks (RNNs)119. RNNs process input sequences recursively in order (Fig. 2c). In each recursion, the recurrent unit receives information from the prefix of the input sequence, processes the information, and passes it to the next unit. This recursive process results in the intrinsic ability of RNNs to capture interactions among sequence elements120, such as cis-regulatory elements. However, in practice, RNNs exhibit suboptimal performance when processing long input sequences. For long inputs, an extended number of recursion steps can lead to excessive accumulation or forgetting of past information, which leads to a failure to learn. Long short-term memory (LSTM) addresses this problem by automatically learning what proportion of information to forget or remember from the past sequence121. In RB, several studies have utilized LSTM or similar architectures, such as gated recurrent unit (GRU)122, to predict miRNA–gene associations78, poly(A) sites81, coding potentials63, and differential gene expression123. Notably, while most RNN models for natural language processing operate in a single direction, many RNN models for RB utilize bidirectional LSTM (BiLSTM)124 to exploit the bidirectional nature of genomic sequences and biological interactions. In addition, an RNN can be hybridized with a CNN, which, in principle, allows the CNN to detect local features and the RNN to capture higher-level interactions between captured features. This approach is also often used in RB for tasks such as alternative splicing prediction125, isoform function prediction53, RBP binding-altering variant identification126, and poly(A) site prediction127. A benchmark study demonstrated the superior performance of an RNN/CNN hybrid over a CNN or an RNN in RBP binding prediction128.

Transformers

Both CNNs and RNNs have intrinsic limitations when processing sequence data: they can only capture long-range interactions indirectly through multiple layers or steps. This prevents CNNs and RNNs from learning the dynamic context of the sequence. To overcome these limitations, Vaswani et al. introduced the transformer architecture129. The transformer directly captures long-range interactions among sequence elements by using the self-attention mechanism (Fig. 2d). Self-attention captures every possible pairwise interaction between every sequence element using an attention matrix. Each weight of the matrix represents the relevance of each pairwise interaction to the task. During training, the weights of the attention matrix are updated to reflect the sequence context. Hence, transformers can better capture long-range interactions and dynamic contexts in sequences than CNNs and RNNs. Numerous studies have demonstrated the superiority of transformers in processing natural language, images130, and even biological data8. In RB, the transformer architecture has been applied to poly(A) signal prediction131, gene expression prediction132, cell type annotation44, network-level disease modeling45, and circRNA–miRNA interaction prediction133.

Graph neural networks

Several RB data, including contact information, structure, coexpression, and base pairing data, can be represented as graphs instead of sequences. Representing biological data as a graph allows a flexible representation of interactions between biological entities. Graph neural networks (GNNs) capture information from such graph-type data. In a GNN, a vector corresponding to each node is updated by aggregating the information from connected nodes (Fig. 2e)134,135,136. The GNN can learn to capture interactions between nodes by repeating such updates137. In RB, GNNs have been applied to predict isoform function from isoform association graphs84, to predict RBP binding sites from graph representations of RNA secondary structures91, to integrate gene interaction networks and other biological networks138 and to predict gene coexpression from gene contact graphs derived from Hi-C data85.

Applications of deep learning in RNA biology

We have introduced crucial factors in developing DL models for RB, including large-scale data sources, encoding techniques, paradigms, and architectures. In this section, we demonstrate how these factors collectively contribute to building effective DL models for RB by reviewing specific examples. We review successful DL models that have achieved robust performance in important RB tasks or have showcased the potential of DL for biological discoveries (Fig. 3). By reviewing these models, we not only demonstrate the competence of DL in RB research but also suggest good practices for designing and training DL models that effectively leverage biological data.

DL models provide insights into diverse biological processes involving RNA. In epitranscriptomics, DL models can predict and identify RNA modification sites. To understand pre-mRNA processing, DL models can predict alternative splicing and alternative polyadenylation sites, as well as isoform functions. In ncRNA biology, DL models can identify ncRNA targets, predict coding potential, and identify lncRNA precursors. To understand the biology of RBPs, DL models can predict RBP binding sites and protein‒RNA complex structures. To understand gene expression regulation, DL models can predict gene expression levels, coexpression, and regulatory elements. For medical applications, DL models can diagnose diseases, predict RNA degradation, and predict gene editing efficacy.

Noncoding RNAs

Noncoding RNAs (ncRNAs) are essential layers of transcriptional, posttranscriptional, and translational gene regulation and are crucial for tissue functions and developmental programs139,140. Diverse types of ncRNAs have been associated with cancer and genetic diseases141,142. One of the most representative types of ncRNAs is microRNAs, which are short RNAs produced from RNA hairpins. miRNAs are loaded into Argonaute (AGO) proteins and form RNA-induced silencing complexes (RISCs), which target mRNAs through complementary base pairing and reduce their expression143. The development of a model that can accurately predict the genome-wide regulatory effect of an arbitrary miRNA is a core task in RB that can enable a network-level understanding of miRNA-mediated gene regulation and pave the way for miRNA-based therapeutic development144. Several attempts to construct such a model using classical machine learning and DL algorithms have been made, underscoring the importance of the task. Among these models, TargetScan is the current state-of-the-art model. This model has achieved significantly improved accuracy in predicting miRNA targeting efficacy compared to that of previous machine learning methods based on thermodynamic models or correlative approaches95. TargetScan utilizes a CNN-based module to predict the affinities between an AGO–miRNA complex and target mRNAs. To alleviate the scarcity of experimental affinity data, the authors generated a large affinity dataset through AGO-RNA bind-n-seq (AGO-RBNS). The trained affinity predictor model was combined with a biochemical model to predict the repression of individual mRNAs. The model was more accurate than the previous versions of TargetScan, which did not use DL. Although TargetScan achieved robust performance in predicting miRNA targeting efficacy, it still explained only a small fraction of the variability in resulting mRNA expression changes from miRNA transfections. Therefore, this biologically important task needs further improvement, possibly through a more sophisticated approach to utilizing DL.

Long noncoding RNAs (lncRNAs) are ncRNAs longer than 200 nt. They bind to DNA, RNA, and proteins and regulate gene expression via various mechanisms, including chromatin remodeling, gene silencing and activation, nuclear organization, and RNA turnover regulation139. lncRNAs are transcribed from various sources, including intergenic regions, introns, antisense strands, and enhancers. Accurately distinguishing lncRNAs from mRNAs is an important challenge in RB but is often complicated by the presence of various isoforms145. Moreover, it is equally important to predict the functions of identified lncRNAs. To address both tasks, LncADeep utilizes separate modules for each task99. The DBN-based lncRNA identification module was trained on annotated transcript sequences to learn the features distinguishing lncRNAs from mRNAs. The MLP-based functional annotation module was trained on lncRNA‒protein interaction data from the NPInter database146. The functional annotations of the identified lncRNAs were made through pathway enrichment analyses of the predicted lncRNA-binding proteins via pathway databases, KEGG147 and Reactome pathway148. An average of 25 KEGG and 67 Reactome pathway annotations were associated with each identified lncRNA, indicating the complexity of lncRNA functions. The resulting model outperformed previous models in both tasks. While lncRNAs pose a unique challenge in RB due to their diverse regulatory mechanisms and sequence similarity to mRNAs, studies have shown that DL can be leveraged to elucidate the complex biology of lncRNAs.

Circular RNA (circRNA) is a subtype of lncRNA, which can accumulate in specific cell types due to the increased stability rendered by a closed ring structure. These accumulated circRNAs often act as sponges for miRNAs and RBPs, inhibiting their regulatory function and adding another layer of posttranscriptional gene regulation149. By modulating posttranscriptional gene regulators, circRNAs are associated with various diseases, such as cancer150. Therefore, identifying circRNAs is an important task. Distinguishing circRNAs from linear lncRNAs based on their sequences is challenging because the circularization process can often be inferred not from the transcript sequence itself but from the surrounding genomic context. This difficulty is reflected in the suboptimal performance of methods that distinguish circRNAs from other lncRNAs. To develop a more accurate circRNA identification model, circDeep uses reverse complement matching and conservation information from flanking regions of input sequences74. The circDeep architecture is a hybrid CNN-BiLSTM model that captures both local interactions and global interactions in input sequence features. This model was trained on 32,914 human circRNAs from the circRNADb151 and other lncRNAs from GENCODE152 to learn the features distinguishing circRNAs from other lncRNAs. Since circRNAs are not fully annotated in standard annotations such as GENCODE and Ensembl, it is necessary to refer to specialized databases such as circBase to construct circRNA-related training datasets153. The resulting model significantly improved the accuracy of the identification of circRNAs.

The utilization of DL to identify or distinguish major species of ncRNAs has significantly improved the accuracy compared to the previous machine learning methods (Table 2 and Supplementary Table 1). Nevertheless, functional annotation of identified ncRNAs still depends heavily on traditional enrichment analyses and thus remains a challenge for DL. To discover the specific functions or biological roles of ncRNAs, adequate DL methods, such as GNNs, which can learn from pathways or interaction networks involving ncRNAs, could be adopted.

Epitranscriptomics

Epitranscriptomics is a field of RB studying various RNA modifications, which are crucial components of posttranscriptional gene expression regulation. RNA modification affects splicing, poly(A), mRNA export, RNA degradation, and translation efficiency154,155. Through these biological processes, RNA modification is associated with cell differentiation, cancer progression, and neurological development156,157. Therefore, understanding the functions and regulatory mechanisms of RNA modification is a significant objective in RNA biology. Investigating the biology of RNA modifications requires profiling the deposition of RNA modifications at the transcriptomic scale. For this purpose, high-throughput experimental methods, including m6A-seq11, miCLIP158, and bisulfite-seq159, have been developed. Although these methods enable systematic investigations of epitranscriptomic landscapes, they often suffer from low resolution, high false positive rates, motif biases, and low concordance between experiments160. To overcome this limitation, researchers have applied DL methods to profile the epitranscriptomic landscape. Transcriptome-wide prediction of RNA modifications via DL can improve the understanding of posttranscriptional gene regulation by elucidating the underlying mechanisms, associated variants, and phenotypic effects of RNA modifications161.

One approach to this objective is to train DL models that predict RNA modification sites from transcript sequences. This approach has been applied to various RNA modifications, including m6A115, 5-methylcytosine (m5C)162, pseudouridine163, and 2′-O-methylation164. One notable example is iM6A, which learned contextual information around the experimentally validated m6A modification sites with a ResNet-based model115. The inputs were pre-mRNA sequences with 5000 flanking nucleotides, and the labels were generated based on m6A sites validated through m6A-CLIP experiments. The iM6A model outperformed classical machine learning methods in predicting m6A modifications and generalized well to the sites validated with m6A-label-seq, MAZTER-seq, m6ACE-seq, and miCLIP2. By analyzing the model, the authors hypothesized that m6A-associated variants accumulate 50 nt downstream of the m6A site. They validated this hypothesis by analyzing an independently generated experimental dataset. They also showed that previously known pathogenic single-nucleotide variants (SNVs) are associated with changes in m6A deposition and suggested that codon usage may affect m6A deposition. While many RNA modification prediction models focus on predicting a single type of modification, multiRM predicts twelve widely occurring modifications with a single model76. multiRM was trained on twenty epitranscriptome profiles generated from fifteen different technologies. The model utilizes three embedding schemes: a 1D convolution, a hidden Markov model, and word2vec. The embedding vectors generated by each scheme were fed into LSTM and attention methods to learn interactions among features relevant to different modifications. Analyses of attention matrix weights revealed modification motifs that were highly concordant with known RNA modification motifs. Moreover, the attention weights of different types of modifications were strongly correlated, indicating crosstalk among different modifications, as reported in previous studies. The above studies demonstrate the feasibility of elucidating the mechanisms regulating the epitranscriptomic landscape by developing DL models that predict the deposition of RNA modifications.

Another approach for applying DL to profile modification depositions is to develop a DL model that captures modification signatures from direct RNA sequencing (DRS) data. DRS is a technique in which native RNA is sequenced without the need for reverse transcription165. In this method, the electrical current changes during the translocation of an RNA molecule inside a nanopore are measured to infer nucleotide identity. Since canonical and modified RNA bases cause different electrical current shifts, DRS can be used to identify modified bases via DL166. m6Anet identifies m6A modifications on transcripts with DRS data79. m6Anet uses an embedding layer to encode 5-mers, and predicts the modification probability of each site using an MLP. The model first predicts the probabilistic measure for each read and then determines the site-level probability from these measures. This technique is called multiple instance learning. m6Anet performed better than previous methods and generalized well to other cell lines and species. In addition to m6A, Dinopore predicts adenosine-to-inosine (A-to-I) editing from DRS data69. Dinopore was constructed based on ResNet and uses multiple branches with various convolutional filter sizes to capture local interactions with different spans. This method outperformed previous methods and showed robust interspecies generalizability. As shown above, the development of DL models that accurately predict RNA modifications has enabled researchers to systematically study the regulatory elements relevant to RNA modifications and phenotypic or disease consequences of RNA modifications (Table 3 and Supplementary Table 2). Integrating diverse types of transcriptomic data, such as gene expression, RNA structure, and RBP binding data, will facilitate the development of multimodal DL models that learn systematic knowledge of the epitranscriptome, expanding the understanding of posttranscriptional regulation.

RNA-binding proteins

RNA-binding proteins (RBPs) control various aspects of gene expression regulation, including mRNA decay, mRNA-ncRNA interaction, RNA modification, translation efficiency, and RNA processing167. The main challenge in RBP biology is to model the binding preferences and predict the binding sites of RBPs, which are crucial for understanding posttranscriptional and translational regulatory mechanisms. To address this challenge, RBP binding prediction models have been commonly trained using experimental data from RNAcompete168 and CLIP-based experiments9, such as HITS-CLIP169, PAR-CLIP170, and eCLIP171. DeepBind is the foundational research for utilizing DL in RBP biology104. In DeepBind, a CNN motif detector predicts protein-binding motifs from genomic sequences, and the resulting motif feature vector is fed into feedforward layers to yield binding prediction scores. Each DeepBind model accounts for a single type of protein, and the models were trained for 194 RBPs. DeepBind achieved a robust performance and showed that the RBP motif preference knowledge learned from in vitro experiments can be generalized to in vivo transcriptomes via DL.

While DeepBind outperformed previous approaches using a motif detector, subsequent studies showed that integrating contextual information from RNA structure and sequences could improve RBP binding prediction. The biological rationale for this approach is that the binding affinity of RBPs for an RNA target is influenced not only by the local binding motif but also by the sequence composition and structural context of the target RNA172,173. Deepnet-RBP utilizes a multimodal DBN that receives primary, secondary, and tertiary structures102. The primary and secondary structures were encoded as k-mer count vectors, and the tertiary structures were encoded as structural motif indicating vectors. The secondary and tertiary structures were computationally predicted. The model outperformed classical machine learning models and predicted several potential secondary and tertiary structural motifs. Similarly, iDeepS utilized computational RNA structure prediction from RNAshape and slightly outperformed DeepBind174. However, computing the structures of RNA molecules is computationally burdensome and often inaccurate. Because of this limitation, iDeepE attempted to capture structural information from sequences surrounding the RBP binding sites66. Using two CNNs with local and global resolution filters, iDeepE outperformed previous DL models, including Deepnet-RBP.

While the above approaches use dense vector representations, molecular graphs are commonly used in biology and chemistry to model the structures of molecules, including RNA and proteins134,175. RPI-Net utilizes a GNN to learn the graph representation of the RNA structure and to predict RBP binding and outperforms previous DL models91. Moreover, the study pointed out that some CLIP techniques, including PAR-CLIP and HITS-CLIP, may introduce sequence bias in the training set and that previous machine learning and DL models picked up the bias. When building the training set for RPI-Net, the authors de-biased the PAR-CLIP data by replacing the biased nucleotides with random bases. This example shows the importance of inspecting and de-biasing biological data when training DL models.

PrismNet, one of the latest DL models for RBP binding site prediction, incorporates in vivo RNA secondary structure data produced using icSHAPE-seq instead of computationally folded structures176. In this study, the squeeze-and-excitation network (SENet) architecture, which captures global interdependencies, was employed with ResNet177. PrismNet achieved robust performance in the benchmark conducted by the authors and was applied to discover disease-associated SNVs that affect RBP binding. In Zhou et al., a CNN-based RBP binding prediction model trained using eCLIP data was utilized to predict the effect of noncoding variants on autism spectrum disorders (ASDs), underscoring the utility of RBP prediction in medical genomics178.

Overall, various studies have shown that it is possible to model the binding preferences and binding sites of RBPs accurately (Table 4 and Supplementary Table 3). The performance of RBP prediction models was improved by incorporating sequence context and in vivo structure information, highlighting the importance of non-motif features in RBP binding. Recent breakthroughs in protein structure prediction have enabled accurate prediction of protein‒RNA interactions at the structural level179. Plausibly, integrating protein‒RNA complex structure data with current RBP binding site prediction methods could assist in accurate RBP binding site prediction. Improvements in RBP prediction models will promote the discovery of drug targets and biomarkers since RBP binding is a cardinal component of posttranscriptional and translational gene expression regulation.

Pre-mRNA processing

Pre-mRNA processing is a complex process involving 5′ capping, splicing, and 3′ poly(A). These processes serve as important points of posttranscriptional gene expression regulation, often through alternative splicing and alternative poly(A) (APA)180,181. Dysregulation of pre-mRNA processing can cause various genetic disorders, including muscular dystrophy and progeria182,183. A major task in this domain is the prediction of splicing, which will assist in the discovery of novel disease-associated splicing variants. This task can be further divided into exon usage prediction and splice site prediction. Exon usage prediction involves predicting the exon inclusion rate, termed the percent spliced in (PSI), using predefined exon boundaries184. The sequences and abundances of isoforms produced by alternative splicing inferred from RNA-seq data are commonly used for training and evaluation. Leung et al. were among the first to apply DL for alternative splicing prediction. They utilized MLP to predict tissue-specific alternative splicing from manually extracted genomic sequence features and one-hot encoded tissue types80. In this study, alternative splicing prediction was formulated as a classification task using only three PSI categories—low, medium, and high—and therefore, the predictive capability was limited. In their subsequent work, Xiong et al. regarded the PSI as a continuously distributed variable92. Using this strategy, the authors discovered novel ASD-associated splice variants and inferred the pathogenic mechanism of specific SNVs in Lynch syndrome.

Splice site prediction involves the identification of splice sites from the genomic sequence. SpliceRover employs a CNN motif detector to predict splicing donor and acceptor site probabilities using genomic sequences of 15–402 nucleotides and outperformed previous machine learning methods185. This model was analyzed, and it was found that the model not only detects well-known splice site motifs but also considers additional factors, such as the polypyrimidine tract. SpliceAI adopts dilated convolution kernels to utilize longer sequence contexts of up to 10,000 nucleotides113. The authors showed that capturing long-range contexts improved splicing site prediction by comparing the performances of models with varying input lengths. The SpliceAI prediction score was used to discover a novel type of splicing variant, termed the cryptic splice variant, which creates splicing sites weaker than canonical splice site variants. In contrast to canonical splice variants that exert similar effects across tissues, cryptic splice variants alter splicing in a tissue-specific manner and are associated with intellectual disability and ASD. This example underscores how developing and utilizing DL models can assist novel scientific discoveries in RB.

Predicting poly(A) is another major task for DL in mRNA processing since APA is an essential regulatory mechanism of differential gene expression and is associated with various diseases. Poly(A) position data from PolyA-Seq186 and 3P-seq187 are commonly used to train poly(A) prediction models. DeepPolyA and Leung et al. are initial works that adopted different strategies to utilize DL for poly(A) prediction from genomic sequences112,188. DeepPolyA formulates this task as a binary classification problem between poly(A) sites and non-poly(A) sites, and Leung et al. formulate the task as a regression of the poly(A) site strength. Both models outperformed classical machine learning approaches for predicting poly(A) sites. While these models were trained from an in vivo dataset, a subsequent CNN model, APARENT, utilizes a large in vitro dataset generated by massively parallel reporter assay (MPRA) to represent biological complexity189. APARENT formulated the task as isoform fraction regression and cleavage site distribution prediction. Using APARENT, it was possible to capture several determinants of poly(A) site selection, identify potential pathogenic poly(A)-affecting variants, and design poly(A) signals that produce desired isoforms. The success of APARENT has demonstrated the utility of massive-scale experiments in developing DL models for understanding complex biological processes. Overall, multiple studies have demonstrated the utility of DL in discovering regulatory sequences and context features that determine pre-mRNA processing (Table 5 and Supplementary Table 4).

Gene expression

One fundamental goal in genomics and RB is to model gene expression regulation using computational models. This goal is often formulated as predicting the gene expression level given the genomic sequence190. Solving this task would allow a comprehensive and quantitative analysis of the regulatory functions of noncoding sequences and the prediction of noncoding variant effects in silico. Despite its importance, the complexity of the mechanisms regulating gene expression has impeded conventional machine learning models from solving this task. Consequently, multiple studies have utilized DL to address this fundamental task.

Basenji and ExPecto are pioneering works on this topic105,191. Both are CNN models that predict the expression level of a given genomic sequence. Basenji has a receptive field of 32 kb and utilizes dilated convolution to predict the results of DNase-seq, ChIP-seq, and CAGE jointly. Among them, CAGE corresponds to gene expression. Unlike Basenji, ExPecto uses a sequential approach in which the first module of the model predicts epigenetic markers and TF binding from 40-kb DNA sequences, and the downstream modules predict gene expression levels. The epigenetic module was trained with ENCODE and Roadmap Epigenomics data, while the expression module was trained with CAGE data. Basenji and ExPecto both yielded gene expression level predictions that agreed well with the experimental eQTL data. ExPecto was also utilized to predict disease risk alleles and to predict and prioritize causal genome-wide association study (GWAS) variants. Xpresso is another CNN developed to predict gene expression levels from sequences. Unlike Basenji and ExPecto, Xpresso did not utilize epigenetic data for training and strictly relied on the genomic sequence192. The authors showed that the sequence-only model can perform comparably to previous models using epigenetic features, suggesting that gene expression can be inferred from flat genetic sequences. Moreover, Xpresso exhibits robust generalizability between cell lines and species. Notably, Xpresso was utilized to derive a novel hypothesis that CpGs are enriched in the core promoters of highly expressed genes, potentially expanding the role of CpG in transcriptional regulation and emphasizing that DL models can aid in novel biological discoveries.

In contrast to the models mentioned above, which all use the CNN architecture, Enformer was built with the transformer architecture to effectively capture long-range interactions between regulatory elements132. The input length for this model is 200 kb, which is significantly longer than that of the previous models. This model outputs TF ChIP-seq, histone modification ChIP-seq, DNase-seq, ATAC-seq, and CAGE tracks only from genomic sequences. Enformer outperformed Basenji254 and ExPecto in RNA expression prediction. In addition, this model exhibited robust eQTL variant effect prediction and mutation effect prediction performance. The study verified the benefit of using the attention layer through an ablation study, demonstrating the effectiveness of transformers for processing long biological sequences. Moreover, Enformer showed the possibility of detecting candidate enhancers using attention weights and gradient-based saliency scores. Overall, multiple studies have shown that inferring gene expression solely from genomic sequences is possible using DL. This trend suggests that utilizing longer genomic sequences with an effective architecture would lead to better sequence-based modeling of gene expression.

Although sequence-only approaches have been successful in modeling gene expression, researchers have modeled gene expression based on non-sequence features, which could expand the dimension of gene expression regulation research. D-GEX is an MLP model developed to predict gene expression using the expression levels of 1000 landmark genes, enabling whole-transcriptome profiling via economic Luminex beads83. GEARS is another DL model for learning gene–gene interactions193. In GEARS, the relative change in gene expression upon perturbation of specific genes was predicted using an MLP. DeepChrome is a CNN that predicts gene expression levels from histone modification profiles, and it outperformed classical machine learning models for histone-based expression prediction194. DEcode is a CNN that infers differential gene expression levels from the binding information of three types of regulatory molecules, RBPs, miRNAs, and TFs. Using these input features allowed modeling at both the transcriptional and posttranscriptional regulation levels195. The output of DEcode is a tissue-wide expression profile of each gene, composed of relative gene expression levels in 53 human tissues. The authors analyzed the model to measure the importance of each regulatory molecule in the differential expression for each tissue. The regulatory significance of RBPs, miRNAs, and TFs inferred by analyzing DEcode was validated with in vivo loss-of-function mutation data and disease associations. This example shows how a DL model can prime the production of new biological knowledge.

Translational regulation is another important axis of gene expression regulation that can be probed by ribosome profiling and protein reporter assays. The sequence and structure of the 5′ UTR are the key determinants of translational regulation. Optimus 5-Prime utilized a CNN to predict ribosome load from the 5′ UTR sequence using the MPRA dataset for training196. Optimus 5-Prime accurately predicted the effect of the 5′ UTR sequence on the ribosome load. An analysis of the model revealed that the model can capture translation initiation site (TIS) sequences, stop codons, and non-canonical start codons. The model was also utilized to design a 5′ UTR of desired strength, demonstrating how such DL models can be utilized for synthetic biology. TISnet is a DL model for TIS prediction from primary sequences and RNA structures, the architecture of which was adopted from PrismNet197. The study showed that both sequence and structure contribute to the accurate prediction of the translation initiation probability of a given AUG. By analyzing the downstream regions of predicted start codons, it was hypothesized that the downstream hairpin structure dictates start codon selection. This hypothesis was experimentally validated, providing a critical point for rational protein design.

Overall, several studies have shown that DL can be leveraged to model gene regulatory mechanisms at the transcriptional, posttranscriptional, and translational levels (Table 6 and Supplementary Table 5). These DL models for gene expression modeling have paved the way for in silico identification of pathogenic variants, potential biomarkers, drug targets, and synthetic biology.

Medical applications of RNA biology

Fundamental discoveries in RB have made multiple contributions to medicine, including RNA vaccines198, RNA-targeting drugs199,200, and RNA therapeutics201,202. Numerous studies have also shown that transcriptomic data can be leveraged for disease diagnosis203,204. By replacing or complementing conventional methods, RNA-seq can be utilized for precise diagnosis and to provide personalized treatment strategies. For example, Mayhew et al. aimed to detect acute infection from the expression of 29 marker host genes205. Although it is theoretically possible to diagnose infection using the host transcriptome, this approach has been impeded by the transcriptomic heterogeneity between patients. To overcome this limitation, the authors trained an MLP named IMX-BVN-1 using an infection dataset compiled from GEO and ArrayExpress. The model successfully diagnosed infections in an independent ICU dataset. This example shows that using a predefined set of marker genes allows the efficient development of a diagnostic model. However, the limited availability of comprehensive marker gene sets often obstructs this approach for various diseases. Moreover, utilizing the entire transcriptomic landscape, instead of using only a small fraction of the transcriptome, may unleash the potential of DL. For example, Comitani et al. developed a DL model for pediatric cancer classification from the expression of 18,010 genes and pseudogenes profiled by RNA-seq206. Transcriptional diversity in pediatric tumor tissue has been a major issue in transcriptome-based diagnosis. The authors adopted a self-supervised learning framework to overcome this challenge. First, they developed RACCOON, a framework for unsupervised tumor transcriptome clustering and identification. The tumor subtype hierarchy output by RACCOON was utilized to train OTTER, an ensemble of multiclass classifier CNNs. The accuracy of pediatric cancer type prediction by OTTER was 89%, and the predictions were temporally consistent. These examples underline the capability of DL models to generalize from copious amounts of high-dimensional transcriptomic data to yield clinically valuable predictions.

Multiple studies have developed DL models for clinical diagnosis by integrating transcriptomic data with other modalities, including genomic and proteomic data. This multimodal approach improves performance and generalizability by allowing models to generate more comprehensive representations of the biological states of patients. MOGONET is a multimodal and multiomics GNN for patient classification that integrates DNA methylation, mRNA expression, and miRNA expression profiles207. In MOGONET, separate GNNs generate initial prediction vectors from each of the three inputs, and the tensor product of the three prediction vectors is passed to an MLP for patient classification. MOGONET was validated against an Alzheimer’s disease diagnosis task, a glioma grade classification task, a kidney cancer type classification task, and a breast invasive carcinoma classification task. In addition to direct diagnosis, multimodal DL has also been utilized for biomarker discovery, a crucial component of diagnostic development. As another example, CoraL is a multimodal CNN that predicts disease associations of ncRNA-encoded small peptides (ncPEPs) and their originating short ORFs (sORFs) for cancer biomarker discovery (59). The model was trained with meta-learning and demonstrated the generalizability of DL-powered cancer biomarker discovery across various types of cancers. HE2RNA is another multimodal DL model for clinical RB that predicts the gene expression profile from histology images208. HE2RNA is an MLP that generates a patch-level transcriptomic representation from whole-slide images of tumor tissues. The gene expression levels were obtained from the TCGA database. In addition to predicting gene expression levels, HE2RNA can also generate spatial gene expression maps and predict microsatellite instability via transfer learning. These examples show that transcriptomic data can be integrated with other data modalities through DL to produce medical predictions.

In addition to diagnosis, transcriptomic data have also been leveraged for prognosis prediction via DL. For example, Qiu et al. utilized RNA-seq data from TCGA to train an MLP that predicts patient survival from the expression level of 17,176 genes59. The authors adopted a meta-learning strategy to learn the parameter initialization, which allowed a few-shot training of the final model. This study demonstrated the utility of meta-learning for the few-shot survival prediction task by comparing it with regular pre-training and combined learning. Moreover, the feasibility of few-shot learning in transcriptome-based prognosis prediction was validated by demonstrating that the performance of the few-shot model was comparable to that of a many-shot model.

The success of mRNA vaccines amid the COVID-19 pandemic209 has drawn the attention of the pharmaceutical industry to RNA-based drugs. Beyond COVID-19 vaccines, RNA-based drugs offer promising therapeutic potential for preventing infections and treating various diseases, including cancer, cardiovascular diseases, and neurodegenerative diseases210. DL has also been utilized for RNA-based drug research since it has been widely used in pharmaceuticals to aid in drug target discovery and drug design211,212. A central task in developing effective mRNA-based drugs is to improve their stability, which is often a limiting factor in the global distribution of mRNA vaccines. Stanford OpenVaccine is a crowdsourced effort to develop a DL model that accurately predicts the stability of an arbitrary mRNA molecule213. The project was hosted on Kaggle, a Google platform for public DL competitions. The best solution outperformed previous machine learning models using data augmentation and ensemble214.

Gene editing, which has recently been approved for clinical use215, is another major field in RNA-based drug research. Several DL methods have been developed for CRISPR‒Cas editing systems, focusing on tasks such as sgRNA optimization and editing outcome prediction. DeepCRISPR utilizes unsupervised representation learning to train a denoising CNN that learns the representation of sgRNAs from sequence and epigenetic features216. The model was fine-tuned to predict on- and off-target effect profiles of sgRNA in the CRISPR-SpCas9 knockout system. CRISPRon exhibited robust performance in gRNA efficiency prediction by training a CNN that uses protospacer and protospacer adjacent motif (PAM) sequences as input217. For CRISPR-based base editing, BE-DICT, a transformer-based encoder–decoder model, was developed to predict the probabilistic outcomes of CRISPR-SpCas9 base editors given the protospacer sequence218. In addition to CRISPR-Cas9, DL-based guide RNA optimization tools have been developed for CRISPR-Cas13d and prime editing219,220. Similarly, RNAi drugs such as short hairpin RNAs (shRNAs) have been employed in RNA therapeutics. The mechanism of shRNA drugs is based on the repression of target gene expression through complementary base pairing between the shRNA and its target mRNA. Embedding shRNAs into miRNA backbones greatly increases the degree of target gene repression due to the utilization of the endogenous miRNA processing pathway, and these miRNA-embedded shRNAs are referred to as shRNAmirs. Predicting the targeting efficacy of shRNAmirs remains a key step in designing RNAi drugs based on shRNAmirs. In this context, deep learning models that predict the efficacy of shRNAmirs have been developed, and one CNN-based model named shRNAI+ has achieved robust performance by using sequence and context features221. These studies suggest that DL can be utilized for designing and optimizing RNA-based therapeutics.

Another important avenue in medical applications of RNA is RNA-targeting drugs. Gao et al. utilized DL to discover therapeutic targets for the splice-correcting drug BPN-15477222. They first selected potentially pathogenic splicing-altering mutations as candidate targets using SpliceAI. To identify drug targets among the candidates, they developed a CNN that predicts drug-induced changes in PSI, given the exon‒intron boundary sequences. Several targets predicted by the CNN were experimentally validated, providing potential therapeutic strategies for diseases, including Lynch syndrome, cystic fibrosis, and Wolman disease. Overall, numerous studies have demonstrated the merit of DL in various medicine-related RB tasks, positioning DL as a core asset in personalized and precision medicine (Table 7 and Supplementary Table 6).

Desiderata for employing deep learning for RNA biology

Numerous examples of successful DL applications in RB demonstrate the competence of DL in elucidating the mechanism of biological processes from large-scale experimental data. Yet, significant challenges remain. First, the imperfect metadata integrity of databases and the scarcity of independent benchmarks hinder the training of effective DL models. Indeed, most DL models in RB have not reached expert-level or experiment-level performance. Second, the difficulty in understanding the prediction of models often impedes the achievement of biological discoveries via DL. Third, the models should be able to integrate diverse modalities of RB data to gain a systematic understanding of the transcriptome. Nevertheless, researchers are actively addressing these challenges. The collective efforts of institutions and laboratories are improving the availability and quality of public RB data. Researchers are adopting novel techniques and integrating multiple modalities to construct models that can perform a wider range of tasks than previous models. In this section, we review the desiderata for exploiting the full potential of DL for RB research, together with prominent DL techniques and efforts to meet the challenges (Fig. 4).

First column: Integrating multimodal transcriptomic data and constructing well-curated databases are prerequisites for developing effective DL models for RB. Unified analysis pipelines and controlled metadata are desirable properties of public RB databases. Second column: Foundation models for RB can be pre-trained with genomic and transcriptomic corpora, functional genomics data, and gene expression data. These models can learn the generalizable knowledge of genetic systems and language. Employing techniques for enhancing the computational efficiency and interpretability of DL models would further improve the utility of DL in RB. Third column: The RB foundation model can perform a wide range of downstream tasks after fine-tuning, including comprehensive network analysis of the posttranscriptional regulatory network, genome-wide prediction of functional elements, and transcriptome-based precision medicine tasks. Fourth column: The released DL model should comply with safety standards, which can be achieved by ensuring fair representation of the population and protecting the privacy of study participants in the training dataset. Moreover, the released model should be benchmarked using standardized pre-compiled datasets. Parts of this figure were created with BioRender.com.

Multidimensional and well-curated databases